Reframing The Future of Free Speech in The Online Era

Can free expression survive if we’ve lost the freedom to choose what we hear?

The views expressed in this guest contribution are those of the author and do not necessarily represent the views of The Future of Free Speech.

Debates about free speech online are misframed. Rather than focusing on what content should be removed or censored, we should focus on restoring the underlying social and technical structures that enabled free speech to function well in the past. A crucial part of that structure is freedom of attention (which I have previously referred to as freedom of impression, as a complement to freedom of expression): the ability of individuals and communities to choose what they listen to, rather than having platforms decide for them.

Humans are deeply social creatures who speak to be heard and understood by others. We make sense of the world, flourish, and find resilience collectively. Free speech is not just a matter of individual expression. It’s a continuous cycle: ideas are formed, expressed, and shaped through social interaction, then fed back into human minds.

The scale and speed of online discourse, and how interactions cascade to influence one another, make it essential to understand that freedom of expression for all speakers, across diverse communities, is feasible and prosocial only when there is freedom of attention for listeners. It’s also only workable when that expression is supported by healthy processes of information sharing and assessment that function as a social mediation ecosystem. Censorship is unnecessary and counterproductive, as it harms the rights of both speakers and listeners, and weakens those processes of collective intelligence.

“Fighting The Last War” — Renewing The Framing of Free Speech for The Online Era

We are now engaged in fierce debates over how to address the aspects of free speech that are not in need of repair. Demands for “freedom of expression” have devolved into zero-sum battles over which side gets censored. Most people want platforms to take some responsibility for reducing harm, but a content policy focused on removing “lawful but awful” content from the public sphere is neither effective nor desirable.

Instead, it entrenches the inherent dilemmas of removal as a form of censorship:

The problem is an intrinsic matter of viewpoint: “free speech for me, but not for thee.” Who decides what viewpoint is true or good?

It is also a practical matter of power: how can we find nuance and balance between top-down control (rules imposed by governments and platforms) and bottom-up freedoms (users and communities setting their own norms and practices)?

There are more effective and win-win remedies than removal, as many have argued.[1] We forget that we traditionally protected our attention by walking away or changing the channel. Speech may, indeed, have social consequences, but that is no justification for deplatforming.

The Core Reframing: Thought as A Social Process

Online technology surfaces a neglected question: “Who controls your attention?” Before the digital age, people naturally had control over what they paid attention to. We were free to walk away from speakers; free to associate or not. Now, platforms control what appears in our feeds and from whom. Instead of choosing for ourselves, we are fighting over how these centralized platforms make those choices for everyone – because they do it badly.

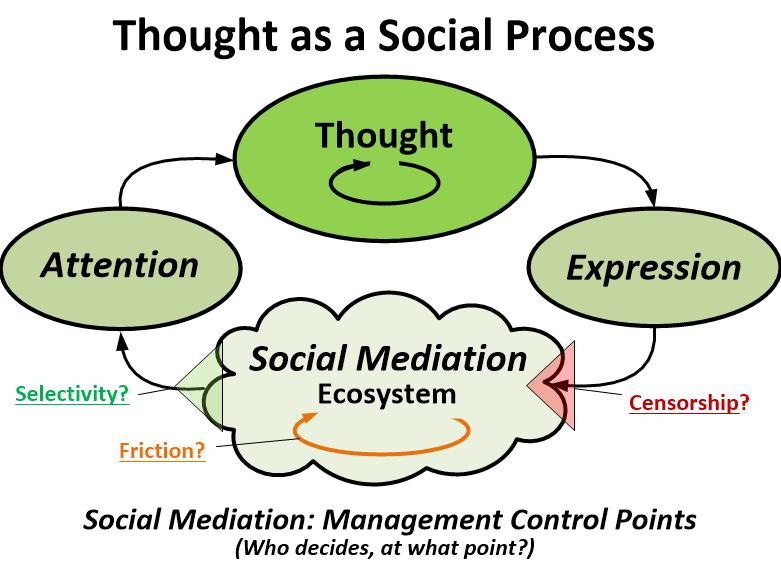

Consider this diagram of Thought as a Social Process.

Thought and Expression happen at the individual level. Current battles are for or against censorship of expression, but expression is just the starting point of the full cycle of thought as a social process.

Social Mediation is the rich, nuanced stage where others process and interpret what’s been expressed, pass it on, and feed it back (or not). When expression is censored, it nips this cycle in the bud, as governments, platforms, or institutions prevent certain expressions from ever reaching the open social mediation layer.

Historically, social mediation occurred through the natural dialogue of communities, institutions, and shared cultural spaces, often guided by trusted intermediaries such as clergy, editors, local leaders, and other influential figures. This layer introduced natural forms of friction through reflective feedback, social norms, and editorial choices over the use of limited channels. These processes encouraged both individual and collective reflection, allowing reputations to form and circulate, thereby slowing the spread of raw information and adding context before ideas reached wider audiences.

Attention is our selective awareness of what comes back to us: which of those mediated ideas are impressed on our attention to shape new thoughts. This is where freedom of attention comes in: the listener’s right to decide what should feed into their attention. Historically, people exercised this simply by choosing who to listen to or what to tune out. That is how word of mouth worked for millennia: expression reached one or a few listeners, then propagated more widely in successive cascades of cycles, if deemed worthy by enough listeners.

Broadcast technology and the emergence of mass media changed that dynamic. Control of social mediation shifted from the soft power of influential intermediaries to the imperious gatekeepers of costly and limited channels, which constrained freedom of expression. That constraint was only partially offset by policies such as the Fairness Doctrine in the US and impartiality rules in the UK, which required broadcasters to present contrasting viewpoints.

But online social media has now shifted dialogue back to a word-of-mouth style of person-to-person propagation, driven by individual posts, likes, shares, and comments. This time, however, the propagation is algorithmic, operating at dramatically increased speed and scale and connecting anyone to anyone globally.

The problem became that “a wealth of information creates a poverty of attention.” Online feeds became a “firehose” of information, rushing far too fast to drink from. As a result, social media feeds now function as attention agents to help listeners manage what they see by ranking and filtering content to allocate their attention.

The “original sin” of the ad model incentivized platforms to build attention agents that compose and optimize feeds, not to serve users but to maximize engagement for ad revenue. That oligopolistic capture of our attention now threatens democracy and our very sanity. Dominant platforms have concentrated power in a few opaque algorithms that are easily manipulated to amplify chaos rather than distill collective wisdom. This also bypasses and drains vitality from the social mediation ecosystem that previously constructed “social trust,” which is essential to democracy and is now being lost.

Compounding this, advanced AI is merging with social media, making control over our attention an even more opaque problem.

We can no longer maintain freedom of speech unless our attention agents protect our freedom of attention. This is an aspect of the freedom of assembly or association recognized in the First Amendment and similar human rights formulations. It works because “harmful” speech does little harm if not propagated widely – and without human social processes, we cannot determine which speech we should propagate.

Back to The Future of Free Speech — Taking Back Control of Our Attention

Barriers to reform include the fact that platforms have little incentive to yield the control that is so profitable, or to serve smaller or demanding communities — and that few people have the skills and patience to manage their feeds directly. What is needed are trusted and prosocial intermediary attention agent services that we can independently select and combine for ourselves, to support the needs of our communities. Open interoperation protocols, or “middleware,” can make this possible by sitting between users and social networking platforms.

The obvious pathway to regain control of our own attention is to open up today’s dominant platforms, for example by expanding on the proposed ACCESS Act, to “[a]llow users to delegate trusted custodial services…required to act in a user’s best interests through a strong duty of care, with the task of managing their account settings, content, and online interactions.” Currently, that path seems stalled by platform resistance and government dysfunction.

Another way to regain control of our attention is through a growing grassroots movement to build open social media ecosystems. This emerged with the “fediverse” built on the ActivityPub protocol used by Mastodon, and is now gaining momentum with the more deeply interoperable “ATmosphere” built on Bluesky’s AT Protocol, which already serves some 40 million users.

What we see now is just embryonic – green shoots of a revitalizing sociotechnical ecosystem. The power lies in the open ecosystem of attention agents, built on these protocols, that will enable people (and their communities) to filter and shape their feeds for themselves, rather than relying on platforms to do so. Some misunderstand Bluesky as just “lib Twitter,” but that is only one part of a broader shift toward user-controlled filtering and community-shaped information flows that can enable a user-selected diversity of “vibes.”

One early example is BlackSky, a community-mediated feed service that draws on human and machine judgment to filter Bluesky feeds in ways that are protective of Black people. Grassroots initiatives like Free Our Feeds, the Social Web Foundation, Project Liberty, and Protocols for Publishers, and feed service tooling providers like Graze, are working to catalyze development of this open “pluriverse.” Meanwhile, reactions to the dominance of American platforms are fueling the EuroStack/EuroSky initiatives to create new and more open infrastructures to serve other communities.

Either way, a key objective is not only to enable our freedom of attention, but to protect it from “enshittification” – the extractive practices that arise when users are captives of entities that control not only their attention, but their connections and data. To prevent that, these new initiatives aim to make “exit” (switching to alternative services) easy, thus keeping real competition in play.

Shifting moderation from top-down removal to more bottom-up, community-mediated management of attention raises concerns, such as the creation of echo chambers and the responsibilities to protect vulnerable users. As I will address in a companion piece, restoring reliance on community-level social mediation, supported by “least-restrictive” ways of adding “friction,” can make those issues manageable in democratic ways that support the fullest practical levels of free speech.

Conclusion

We urgently need a whole-of-society effort to reframe how we understand free speech in this new digital era, and to reshape social media and AI so they support and augment both individual freedom of attention and healthy prosocial mediation. This is not just technology, but an evolving sociotechnical process that includes:

Improving public understanding of how and why free speech works in more positive ways, without the need for censorship, so society can find a stable path between tyranny and chaos.

Reducing censorship, removal, and deplatforming as rapidly as is practical.

Creating space for innovation by opening markets for supportive and open media-tech infrastructure, regardless of whether dominant platforms participate.

Supporting the growth of alternative attention agent tools and services like the Bluesky and Fediverse ecosystems that are open, flexible, and extensible, even if they’re still developing.

Fostering and nurturing the essential interplay of both individual freedom and reinvigorated communities and institutions in online discourse.

My thanks to the many people who have helped me formulate these ideas, and to Jacob Mchangama and Francis Fukuyama for encouraging me to write this and a planned companion piece to go deeper on revitalizing humanity’s social mediation ecosystem (and for invitations to help organize a major project on middleware, and to the Global Free Speech Summit).

Richard Reisman is a non-resident senior fellow at the Foundation for American Innovation, contributing author to the Centre for International Governance Innovation’s Freedom of Thought Project, and a frequent contributor to Tech Policy Press. He was a principal author of “Shaping the Future of Social Media with Middleware” based on a symposium he helped convene for FAI and Stanford Cyber Policy Center, and blogs on human-centered digital services and related tech policy at SmartlyIntertwingled.com.

[1] For example, Goldman, Gillespie, Stray, and Riley and me.